
Three questions crop up when working with inexact rule-based models:

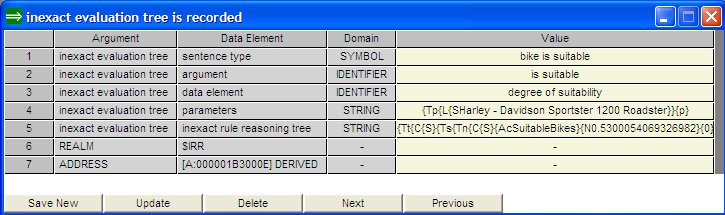

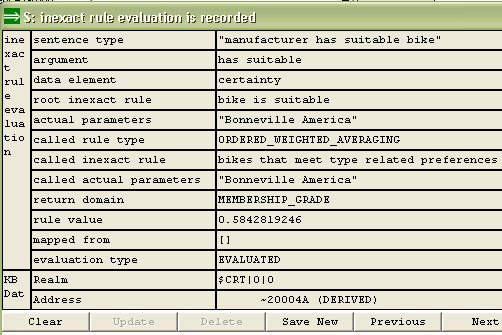

TRACKING THE USE OF INEXACT RULES

Pro/3 stores the actual results of using inexact rules as sentences in the KB. Two sentence types are generated, namely "inexact rule evaluation is recorded" and "inexact evaluation tree is recorded". Generating these sentences is optional; refer to the processing options. Inexact rule reasoning trees are built from the "inexact evaluation tree is recorded" sentences: one tree is generated for each successful interpretation of a root rule, i.e. an inexact rule called from a sentence rule. Pro/3 uses these sentences for the inexact rule reasoning network drawings it generates. (They can also be listed or viewed like any other sentences, but this is not a practical way of evaluating the model.)
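To make the "one tree per root-rule interpretation" idea concrete, here is a minimal Python sketch. It assumes, purely for illustration, that each "inexact evaluation tree is recorded" sentence can be read as a flat record holding a call id, the id of the calling rule (none for a root rule), the rule name and the certainty factor returned. `TreeRecord`, `Node`, `build_trees` and every rule name except "bike is suitable" are hypothetical and do not describe Pro/3's actual sentence layout.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class TreeRecord:
    """Hypothetical stand-in for one 'inexact evaluation tree is recorded' sentence."""
    call_id: int
    parent_id: Optional[int]  # None marks a root rule, i.e. one called from a sentence rule
    rule: str
    cf: float  # certainty factor returned by this rule call


@dataclass
class Node:
    record: TreeRecord
    children: list = field(default_factory=list)


def build_trees(records):
    """Link flat records into one tree per root-rule interpretation."""
    nodes = {r.call_id: Node(r) for r in records}
    roots = []
    for r in records:
        if r.parent_id is None:
            roots.append(nodes[r.call_id])
        else:
            nodes[r.parent_id].children.append(nodes[r.call_id])
    return roots


# Two interpretations of the same root rule give two separate trees.
records = [
    TreeRecord(1, None, "bike is suitable", 0.72),
    TreeRecord(2, 1, "rider is fit", 0.80),
    TreeRecord(3, 1, "terrain is hilly", 0.60),
    TreeRecord(4, None, "bike is suitable", 0.35),
]
trees = build_trees(records)
```

Because every node is created before any linking happens, the records can arrive in any order; a child listed before its parent is still attached correctly.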


RULE TREE FORMAT


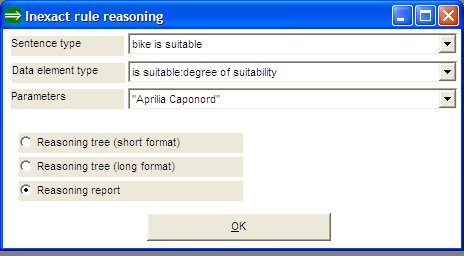

INEXACT RULE REASONING REPORT

The inexact rule reasoning report gives a detailed textual account of the reasoning carried out with the inexact rule network. An example from the MC model shows the general contents of the report.
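The report layout itself is Pro/3's own and is not reproduced here. As a rough sketch only, the Python below shows how a reasoning tree, assumed here to be nested `(rule, cf, children)` tuples with hypothetical rule names, might be walked depth-first to produce an indented textual account of each rule call and its certainty factor.

```python
def report_lines(node, depth=0):
    """Return indented report lines for a (rule, cf, children) tree, depth-first."""
    rule, cf, children = node
    lines = [f"{'  ' * depth}{rule}: CF = {cf:.2f}"]
    for child in children:
        lines.extend(report_lines(child, depth + 1))
    return lines


# Hypothetical reasoning tree for one root-rule interpretation.
tree = ("bike is suitable", 0.72, [
    ("rider is fit", 0.80, []),
    ("terrain is hilly", 0.60, []),
])
print("\n".join(report_lines(tree)))
```

Each level of indentation corresponds to one level of rule calls, so a reader can follow how the root rule's certainty was built up from the rules it called.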


One "inexact rule evaluation is recorded" sentence is generated for each interpretation of a certainty rule:


Three evaluation types are indicated:

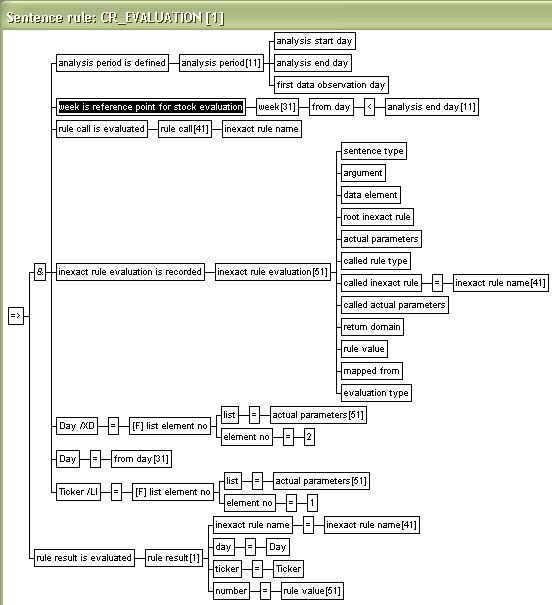

USING THE RULE EVALUATION SENTENCES

The generated evaluation sentences can be listed and reviewed manually like any other Pro/3 sentences. For this purpose it is usually practical to export them, e.g. to MS Excel via the 3DL output format.

More sophisticated techniques for evaluating inexact rule models involve creating evaluation sub-models. An evaluation sub-model consists of one or more sentence rules that derive new sentence types from the "inexact rule evaluation is recorded" sentences.

The following example illustrates the technique. It is taken from an expert system that suggests the "best" stocks to buy, "best" meaning the stocks expected to give the highest return over the next several months. The system attempts to validate its suggestion logic by generating similar advice as of one or more historical dates, called reference points in the model, based on the information available on those dates. It then correlates this advice with the actual returns over a set of periods following those dates.

In principle, certainty factors express a ranking of certainty rather than an absolute measure of it, so it is better to use the ranking of the certainty factors than the factors themselves. The system likewise ranks the returns instead of using the actual returns, to minimize distortion by exceptionally high or low returns.
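The ranking idea can be sketched in Python as follows. The `rank` helper is hypothetical (it is not a Pro/3 built-in); it assigns rank 1 to the highest value and gives tied values the average of their positions, so an exceptionally high return simply becomes rank 1 instead of dominating later calculations.

```python
def rank(values, descending=True):
    """Assign ranks (1 = best); tied values share the average of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i], reverse=descending)
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        # Extend j over the run of values equal to values[order[i]].
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        avg = (i + j) / 2 + 1  # positions i..j are 0-based, ranks are 1-based
        for k in range(i, j + 1):
            ranks[order[k]] = avg
        i = j + 1
    return ranks
```

For example, `rank([0.04, 0.02, 3.50, -0.01])` gives `[2.0, 3.0, 1.0, 4.0]`: the outlier 3.50 would receive rank 1 even if it were only 0.05, which is exactly the robustness the ranking is meant to provide.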


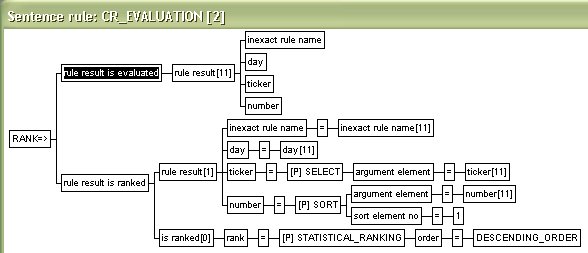

The rule above unpacks the actual parameters in the "inexact rule evaluation is recorded" sentences with the "list element no" built-in function, in order to (i) select only some of the evaluation sentences, by comparing them with the "from day" data element in the "week is reference point for stock evaluation" sentences; and (ii) derive a sentence type in which day and ticker (the first and second actual parameters in the rule call) are explicit, separate data element types. (A "ticker" is an identifier for a stock.)

The "rule result is evaluated" sentences can now be ranked, so that on each evaluation day every rule call is ranked according to its returned value.
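Outside Pro/3, the two steps above plus the per-day ranking might look like the Python sketch below. The record layout, the reference day, the tickers and the rule name "stock is attractive" are all hypothetical; `element` only mimics the idea of a 1-based list-element function and is not the Pro/3 built-in.

```python
from collections import defaultdict

# Hypothetical evaluation records: (rule name, actual parameters, returned CF).
evaluations = [
    ("stock is attractive", ["2001-03-05", "ABC"], 0.62),
    ("stock is attractive", ["2001-03-05", "XYZ"], 0.81),
    ("stock is attractive", ["2001-03-12", "ABC"], 0.40),  # not a reference day
    ("stock is attractive", ["2001-03-05", "QRS"], 0.15),
]
reference_days = {"2001-03-05"}  # stands in for the "from day" data elements


def element(params, n):
    """1-based list access, mimicking a list-element built-in."""
    return params[n - 1]


def rule_results(evaluations, reference_days):
    """(i) Keep evaluations on reference days; (ii) make day and ticker explicit."""
    return [
        (element(params, 1), element(params, 2), rule, cf)
        for rule, params, cf in evaluations
        if element(params, 1) in reference_days
    ]


def rank_per_day(results):
    """Rank rule calls by returned CF within each evaluation day (1 = highest CF)."""
    by_day = defaultdict(list)
    for day, ticker, rule, cf in results:
        by_day[day].append((cf, ticker, rule))
    ranked = {}
    for day, rows in by_day.items():
        rows.sort(key=lambda row: row[0], reverse=True)
        ranked[day] = [
            (pos, ticker, rule, cf)
            for pos, (cf, ticker, rule) in enumerate(rows, start=1)
        ]
    return ranked


ranked = rank_per_day(rule_results(evaluations, reference_days))
```

Grouping by day before ranking keeps each evaluation day independent, so certainty factors from different reference points are never compared with one another.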


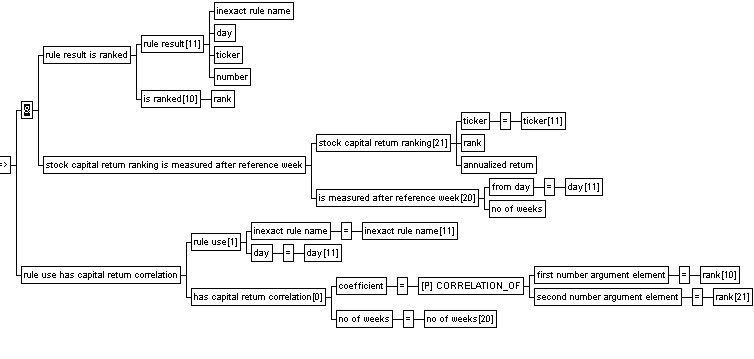

By correlating the ranking of the rule calls with the ranking of the actual return for each stock, the relative success of the rules in predicting the best stocks can be measured to some degree.
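The text does not say which correlation measure the evaluation model uses. One standard choice for comparing two rankings is Spearman's rank correlation, which for rankings without ties is rho = 1 - 6 * sum(d_i^2) / (n * (n^2 - 1)), where d_i is the difference between the two ranks of stock i. A minimal Python sketch, assuming both rank lists are ordered by the same stocks and contain no ties:

```python
def spearman_rho(rule_ranks, return_ranks):
    """Spearman rank correlation for two equal-length rankings without ties."""
    n = len(rule_ranks)
    if n != len(return_ranks) or n < 2:
        raise ValueError("need two rankings of equal length >= 2")
    # Sum of squared rank differences, stock by stock.
    d2 = sum((a - b) ** 2 for a, b in zip(rule_ranks, return_ranks))
    return 1 - 6 * d2 / (n * (n * n - 1))
```

A value near 1 means the rules ranked the stocks much as their returns did; a value near -1 means the opposite. For instance, `spearman_rho([1, 2, 3, 4], [2, 1, 3, 4])` returns 0.8. With tied ranks, the Pearson correlation of the rank lists should be used instead of this shortcut formula.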


The evaluation model in this example shows the general idea. Actual evaluation models can be made much more complex through more detailed analysis of the rules, possibly even employing inexact reasoning rules to determine the performance of the model!

It is sometimes useful to draw an inexact rule tree in which each rule has an associated value stored in the knowledge base. Typically this is a value derived in an evaluation sub-model that says something about each rule (e.g. its effectiveness or relative success). The "inexact rule association is in the KB" sentence type can be used for this purpose:

The "bike is suitable" rule can now be drawn with its associated value, 3.2, shown (the domain name and output format data elements are used to display the value correctly in the tree). The associated parameter is supplied by the KE as input to the rule drawing, which makes it possible to have several associated values for each rule.
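A minimal Python sketch of how an associated value could be looked up and formatted for a tree node. The dictionary is a hypothetical stand-in for "inexact rule association is in the KB" sentences: its key pairs a rule with the associated parameter supplied for the drawing, and the format string plays the role of the output format data element. The parameter names and the 0.45 value are illustrative only; the 3.2 comes from the text above.

```python
# Hypothetical stand-in for "inexact rule association is in the KB" sentences:
# (rule name, associated parameter) -> (value, output format string)
associations = {
    ("bike is suitable", "effectiveness"): (3.2, "{:.1f}"),
    ("bike is suitable", "success rate"): (0.45, "{:.0%}"),
}


def node_label(rule, parameter, associations):
    """Return a tree-node label, appending the associated value when one exists."""
    entry = associations.get((rule, parameter))
    if entry is None:
        return rule  # no association: draw the rule name alone
    value, fmt = entry
    return f"{rule} [{fmt.format(value)}]"


print(node_label("bike is suitable", "effectiveness", associations))
```

Passing "success rate" instead of "effectiveness" labels the same node with a different associated value, mirroring how the associated parameter lets one rule carry several values.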
